Building with large language models used to mean picking one API and writing your own scaffolding. Now it means working with intelligent agents that collaborate, reason, and adapt. This is the core of a new generation of platforms: the multi agent LLM stack.

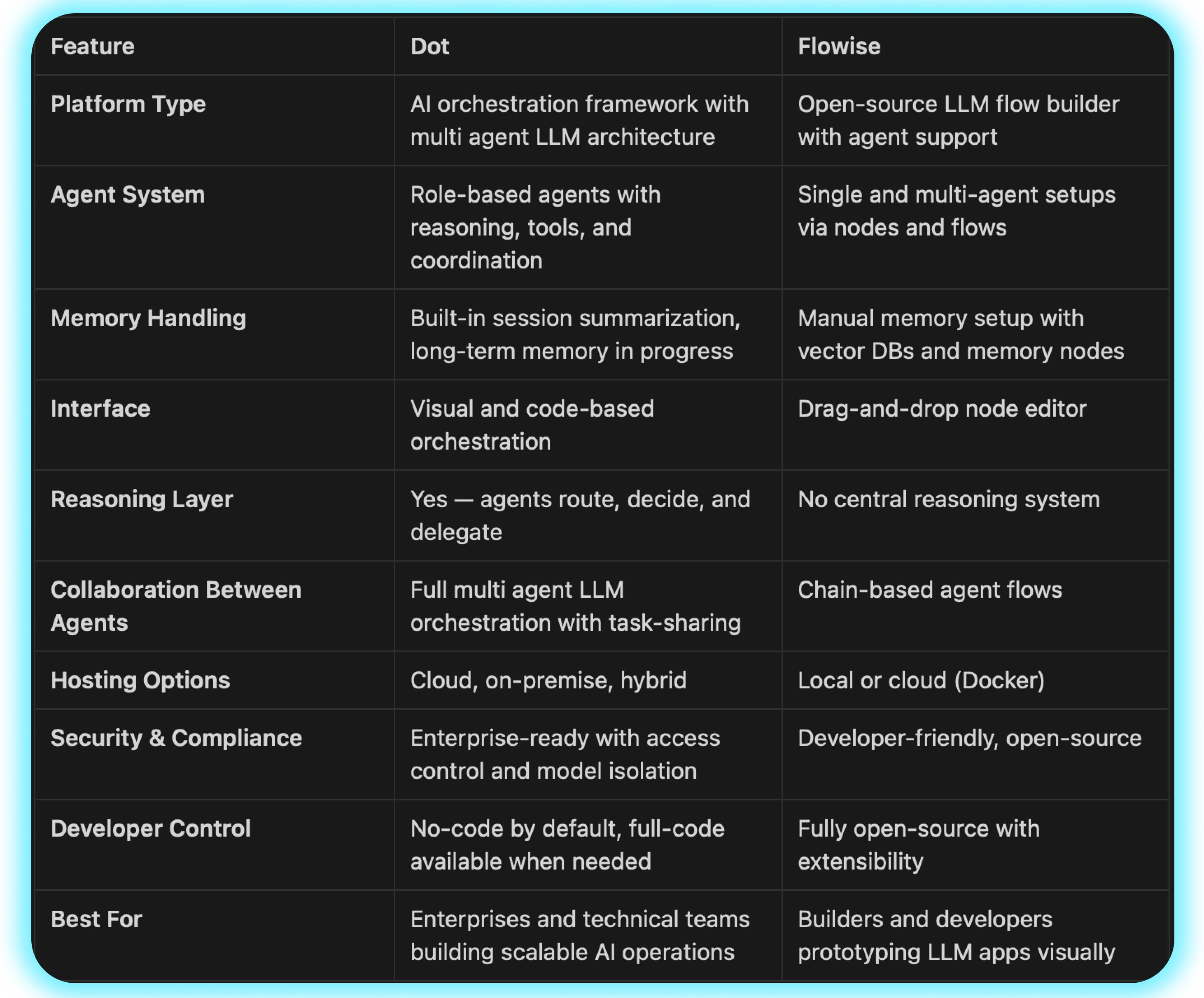

Dot and Flowise are both in this category. They help teams create and manage AI workflows. But when it comes to scale, orchestration, and enterprise readiness, the differences quickly show.

Let’s break down how they compare and why Dot may be the stronger foundation if you’re serious about building with multi agent LLM tools.

Visual Flow Meets Structured Architecture

Flowise is open-source and built around a visual, drag-and-drop interface. It lets you build custom LLM flows using agents, tools, and models. Developers can create chains for Q&A, summarization, or chat experiences by connecting nodes on a canvas.

Dot also supports visual creation, but its agent architecture is layered and role-based. Each agent in Dot is more than a node — it’s a decision-making unit with memory, reasoning, and tools. Instead of building long chains, you assign responsibilities. Agents coordinate under a Reasoning Layer that decides who does what, and when.
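To make the role-based idea concrete, here is a minimal Python sketch. It is not Dot's actual API: the `Agent` and `ReasoningLayer` classes, the role names, and the keyword rule that stands in for LLM reasoning are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Hypothetical agent: a role, a system prompt, tools, and its own memory."""
    role: str
    system_prompt: str  # would be sent to the model in a real system; unused by this stub
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    memory: List[str] = field(default_factory=list)

    def handle(self, task: str) -> str:
        # Record the task so later calls can see what this agent already did.
        self.memory.append(task)
        # A real agent would call an LLM here; this stub runs each tool in order.
        outputs = [tool(task) for tool in self.tools.values()]
        return f"[{self.role}] " + "; ".join(outputs)


class ReasoningLayer:
    """Hypothetical coordinator that decides which agent owns a task."""

    def __init__(self, agents: List[Agent]) -> None:
        self.agents = {agent.role: agent for agent in agents}

    def assign(self, task: str) -> Agent:
        # Stand-in for LLM reasoning: a simple keyword rule picks the role.
        if "summarize" in task.lower():
            return self.agents["summarizer"]
        return self.agents["researcher"]

    def run(self, task: str) -> str:
        return self.assign(task).handle(task)


researcher = Agent(
    role="researcher",
    system_prompt="Find relevant facts.",
    tools={"search": lambda q: f"searched for '{q}'"},
)
summarizer = Agent(
    role="summarizer",
    system_prompt="Condense text.",
    tools={"shorten": lambda q: f"condensed '{q}'"},
)
layer = ReasoningLayer([researcher, summarizer])
result = layer.run("Summarize the Q3 report")
```

The caller only hands a task to the layer. Which agent runs, and with which tools, is decided by the layer and the agent rather than by a chain of nodes wired up in advance.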

If your team wants to build scalable, explainable workflows with logic embedded in agents, Dot offers a deeper approach to multi agent LLM orchestration.

Try Dot now — free for 3 days.

Agent Roles and Reasoning Depth

Flowise supports both Chatflow (for single-agent LLMs) and Agentflow (for orchestration). You can connect multiple agents, give them basic tasks, and build workflows that mimic human-like coordination. But most decisions, such as conditional routing or manual logic setup, still live inside the flow itself.

Dot was built from day one to support reasoning-first AI agents. System prompts define how agents behave. You don’t need long conditional logic chains: just assign the task, and the agent makes decisions using internal logic and shared memory.

This makes Dot a better choice for teams building real business processes where workflows grow, evolve, and require flexibility.

Multi Agent LLM Collaboration

Here’s where the difference becomes clearer: both tools support agents, but only Dot supports true multi agent LLM collaboration.

In Flowise, you build agent chains by linking actions. In Dot, agents talk to each other. A Router Agent might receive a query and delegate it to a Retrieval Agent and a Validator Agent. These agents interact through structured reasoning layers, like a team with a manager rather than blocks on a canvas.

This is especially useful for enterprise-grade workflows like:

- Loan approval pipelines

- Sales document automation

- IT ticket classification with exception handling

Dot treats AI agents like teammates, with memory, logic, and shared tools. Few multi agent LLM tools take collaboration this far.
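The Router, Retrieval, and Validator hand-off described in this section can be sketched in a few lines of Python. This is an illustrative toy, not Dot's implementation: the class names, the `Message` type, the keyword lookup, and the sample policy text are all made up for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Message:
    sender: str
    content: str


class RetrievalAgent:
    """Hypothetical agent that looks up documents by keyword."""

    def __init__(self, documents: Dict[str, str]) -> None:
        self.documents = documents

    def handle(self, query: str) -> Message:
        words = query.lower().split()
        hits = [text for key, text in self.documents.items() if key in words]
        return Message("retrieval", " ".join(hits))


class ValidatorAgent:
    """Hypothetical agent that approves or rejects another agent's answer."""

    def handle(self, answer: Message) -> Message:
        verdict = "approved" if answer.content else "rejected: no evidence found"
        return Message("validator", verdict)


class RouterAgent:
    """Hypothetical entry point that delegates work and keeps a transcript."""

    def __init__(self, retrieval: RetrievalAgent, validator: ValidatorAgent) -> None:
        self.retrieval = retrieval
        self.validator = validator
        self.transcript: List[Message] = []

    def handle(self, query: str) -> str:
        found = self.retrieval.handle(query)     # delegate the lookup
        verdict = self.validator.handle(found)   # delegate the check
        self.transcript.extend([found, verdict])
        if verdict.content == "approved":
            return found.content
        return "Escalated to a human reviewer"   # exception path


# Made-up policy text, used only to exercise the sketch.
router = RouterAgent(
    RetrievalAgent({"loan": "Loans above 50,000 need two approvals."}),
    ValidatorAgent(),
)
answer = router.handle("loan approval rules")
fallback = router.handle("vacation policy")
```

The transcript gives each decision a traceable record of which agent said what, which is the kind of explainability an approval pipeline or a ticket-classification flow with exception handling would need.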

Memory and Context Handling

Flowise lets you pass context through memory nodes. You can set up Redis, Pinecone, or other vector DBs to retrieve and store context. This works well but requires manual setup for each agent or node.

Dot automates this process. By default, it uses session summarization, converting full chat histories into compact memory snippets. These summaries are then used in future sessions, saving tokens and keeping context sharp.
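As a rough illustration of session summarization in general, the Python sketch below compresses a chat history into one short snippet and feeds that snippet, not the full log, into the next session. The function names and the truncation-based summarizer are assumptions made for this example. A production system would typically ask an LLM to write the summary, and nothing here reflects how Dot does it internally.

```python
from typing import Dict, List

Turn = Dict[str, str]  # {"role": "user" | "assistant" | "system", "content": "..."}


def summarize_session(history: List[Turn], max_words: int = 12) -> str:
    """Stand-in summarizer: keeps the first few words of each user turn."""
    snippets = []
    for turn in history:
        if turn["role"] == "user":
            words = turn["content"].split()
            snippets.append(" ".join(words[:max_words]))
    return "Earlier, the user asked about: " + " | ".join(snippets)


def start_session(memory: List[str], user_message: str) -> List[Turn]:
    """Build the prompt for a new session from stored summaries, not full logs."""
    context = "\n".join(memory)
    return [
        {"role": "system", "content": f"Known context:\n{context}"},
        {"role": "user", "content": user_message},
    ]


# Made-up conversation with one deliberately long assistant reply.
history = [
    {"role": "user", "content": "How do I reset my VPN token?"},
    {"role": "assistant", "content": "Open the portal, choose Security, then Reset token. " * 20},
    {"role": "user", "content": "Thanks, and where do I request a new laptop?"},
]
memory = [summarize_session(history)]
next_prompt = start_session(memory, "Any update on the laptop request?")
```

The stored snippet is a small fraction of the original transcript, which is where the token savings come from: the next session starts with the gist of the earlier one without replaying the long assistant reply.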

Coming soon, Dot will support long-term memory and cross-session retrieval across agents. That’s a major step forward for scalable multi agent LLM systems.

Deployment and Integration

Flowise can be deployed locally or in the cloud and integrates with model providers such as OpenAI, Anthropic (Claude), and Hugging Face. As an open-source platform, it gives you full flexibility. It’s great for small teams or experimental use cases.

Dot supports cloud, on-premise, and hybrid deployments, each tailored for enterprise compliance needs. It also comes with pre-built integrations for Slack, Salesforce, Notion, and custom APIs. Dot is made for secure environments, with support for internal model hosting and multi-layer access control.

For enterprises, Dot’s integration and deployment options make it a safer, more scalable choice.

Feature Comparison Table

Developer Flexibility and Control

Flowise shines in flexibility. As an open-source project, it’s great for those who want to customize flows deeply. You can fork it, extend it, and self-host. Its community is active and helpful, especially for solo developers and small teams.

Dot is no-code by default, with code available when you want it. You can edit agent logic, prompt flows, and integrations directly. More importantly, developers don’t have to rewrite logic in every flow: with Dot, you define once and reuse everywhere, a big win for engineering speed and consistency.

If you’re evaluating serious orchestration tools beyond prototypes, check out our full Dot vs. CrewAI comparison to see how Dot handles complex agent collaboration compared to other popular frameworks.

Try Dot: Built for Enterprise AI Orchestration

Flowise is an impressive platform for building with LLMs visually, especially if you want full flexibility and are ready to manage the details.

But if your team needs smart agents that think, collaborate, and scale across departments, Dot brings structure to the chaos. With reasoning layers, built-in memory, and deep orchestration, Dot makes multi agent LLM systems practical in real enterprise settings.

Try Dot free for 3 days and see how quickly you can build real workflows, not just prototypes.

Frequently Asked Questions

Is Flowise suitable for enterprise-level multi agent LLM use cases?

Flowise works well for prototyping and visual agent flows, but it lacks the orchestration, memory, and compliance depth required by most enterprises managing complex multi agent LLM systems.

What makes Dot better than Flowise for developers?

Dot combines a code-optional interface with multi agent LLM architecture, built-in session memory, and reasoning layers, giving developers more control without sacrificing usability.

Can Dot handle production workloads at scale?

Yes. Dot supports cloud, on-prem, and hybrid deployment with cost optimization strategies, secure model hosting, and modular workflows — ideal for scalable enterprise use.